I set out to build Treso, a secure and modern expense-tracking REST API.

I wanted to go beyond simple CRUD apps and dive deep into real-world backend engineering practices, including secure authentication, containerization, and cloud deployment.

This post summarizes my key learnings and insights; it’s not a step-by-step tutorial. If you’re curious about the code, check out my GitHub repo here.

💡 Why This Project?

- Practice professional backend skills: Not just code that “works,” but code that’s production-ready and maintainable.

- Experiment with modern Spring Boot features: Security, validation, OpenAPI docs, metrics.

- Learn cloud deployment: Run my app on AWS EC2, the way real-world services run.

- Master Docker: Build once, run anywhere, test with real databases.

🌱 Learnings from Spring Boot

1. Secure Authentication with JWT

- Spring Security isn’t just about “login”; it’s a whole framework for role-based access control, request filtering, and security best practices.

- Implementing JWT taught me about:

  - Stateless session management

  - Custom authentication filters

  - Using `@ControllerAdvice` for clean error responses
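To make “stateless” concrete: the server stores no session, and every request carries a signed token that the server can check using only its secret key. Below is a minimal sketch of HS256 signing and verification using nothing but the JDK’s `javax.crypto`. The class `JwtSketch`, its methods, and the sample payload are hypothetical illustrations, not code from the Treso repo; in a real app I’d rely on a maintained library such as jjwt or Nimbus JOSE+JWT, which also validate claims like `exp`.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical illustration of HS256 JWTs; not a production implementation.
final class JwtSketch {
    private static final Base64.Encoder ENC = Base64.getUrlEncoder().withoutPadding();
    private static final Base64.Decoder DEC = Base64.getUrlDecoder();
    // Fixed header: this sketch only ever issues and accepts HS256 tokens.
    private static final String HEADER = ENC.encodeToString(
            "{\"alg\":\"HS256\",\"typ\":\"JWT\"}".getBytes(StandardCharsets.UTF_8));

    // HMAC-SHA256 over the JWT signing input ("header.payload").
    private static byte[] hmac(byte[] key, String signingInput) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(signingInput.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 unavailable", e);
        }
    }

    // Produces base64url(header).base64url(payload).base64url(signature).
    static String sign(String payloadJson, byte[] key) {
        String payload = ENC.encodeToString(payloadJson.getBytes(StandardCharsets.UTF_8));
        String signingInput = HEADER + "." + payload;
        return signingInput + "." + ENC.encodeToString(hmac(key, signingInput));
    }

    // Returns the payload JSON if the token was signed with this key, else null.
    // Pinning the header rejects tokens that claim a different "alg";
    // a real implementation must also check the "exp" claim.
    static String verify(String token, byte[] key) {
        String[] parts = token.split("\\.", -1);
        if (parts.length != 3 || !parts[0].equals(HEADER)) {
            return null;
        }
        try {
            byte[] expected = hmac(key, parts[0] + "." + parts[1]);
            byte[] actual = DEC.decode(parts[2]);
            // Constant-time comparison so timing doesn't reveal how many bytes matched.
            if (!MessageDigest.isEqual(expected, actual)) {
                return null;
            }
            return new String(DEC.decode(parts[1]), StandardCharsets.UTF_8);
        } catch (IllegalArgumentException e) {
            return null; // malformed base64url
        }
    }
}
```

In Spring Boot, a check like `verify` would typically run inside a custom filter (for example, one extending `OncePerRequestFilter`) that reads the `Authorization: Bearer <token>` header and, on success, puts an `Authentication` into the `SecurityContextHolder`. Pairing that with `SessionCreationPolicy.STATELESS` tells Spring Security not to create an HTTP session.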

2. Data Validation and Exception Handling

- Using Bean Validation (`@Valid`, `@NotNull`, etc.) makes your API more robust and self-documenting.

- A single global exception handler gives clients clear, consistent error messages, with no more scattered try/catch blocks.
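Here’s the same idea sketched without Spring on the classpath: validation collects every field error at once, and a single handler maps each exception type to one error shape. All names (`CreateExpenseRequest`, `ApiError`, `handle`) are hypothetical. In Spring, `@NotBlank`/`@Positive` plus `@Valid` would replace `validate`, failures on a `@RequestBody` would arrive as `MethodArgumentNotValidException`, and `handle` would become `@ExceptionHandler` methods in a `@RestControllerAdvice` class.

```java
import java.math.BigDecimal;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.NoSuchElementException;

// Hypothetical, framework-free illustration of validation + one global error shape.
final class ErrorHandlingSketch {
    // Request DTO; in Spring the checks in validate() would be field annotations.
    record CreateExpenseRequest(String description, BigDecimal amount) {}

    // The single error shape every failure is translated into.
    record ApiError(int status, String message, Map<String, String> fieldErrors) {}

    // Thrown when one or more fields fail validation.
    static final class ValidationException extends RuntimeException {
        final Map<String, String> fieldErrors;

        ValidationException(Map<String, String> fieldErrors) {
            super("Validation failed");
            this.fieldErrors = fieldErrors;
        }
    }

    // Collects every field error rather than stopping at the first one.
    static void validate(CreateExpenseRequest req) {
        Map<String, String> errors = new LinkedHashMap<>();
        if (req.description() == null || req.description().isBlank()) {
            errors.put("description", "must not be blank");
        }
        if (req.amount() == null || req.amount().signum() <= 0) {
            errors.put("amount", "must be greater than 0");
        }
        if (!errors.isEmpty()) {
            throw new ValidationException(errors);
        }
    }

    // Stands in for a @RestControllerAdvice class: one place maps exceptions to ApiError.
    static ApiError handle(RuntimeException ex) {
        if (ex instanceof ValidationException ve) {
            return new ApiError(400, ve.getMessage(), ve.fieldErrors);
        }
        if (ex instanceof NoSuchElementException) {
            return new ApiError(404, "Resource not found", Map.of());
        }
        // Never leak internal details (stack traces, SQL) to clients.
        return new ApiError(500, "Internal server error", Map.of());
    }
}
```

Because every failure funnels through `handle`, a client only ever has to parse one JSON shape, whatever went wrong.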

3. Building for Scale and Maintainability

- DTOs and service layers are worth it, even in a small app.

- Using JPA with clear entity relationships (user ↔ expenses) makes future features (analytics, sharing, etc.) much easier to add.
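A plain-Java sketch of why the DTO layer pays off: the entity graph holds things the API should never expose (a password hash, plus a back-reference from each expense to its user that can make naive JSON serialization recurse), while the DTO is flat and safe to return. The classes below are hypothetical stand-ins, with the JPA annotations (`@Entity`, `@OneToMany`, `@ManyToOne`) left out so the example runs on its own.

```java
import java.math.BigDecimal;
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

// Hypothetical entity/DTO/service layering; JPA annotations omitted.
final class DtoSketch {
    // Stand-in for a JPA entity: one user owns many expenses.
    static final class User {
        final long id;
        final String email;
        final String passwordHash; // must never appear in an API response
        final List<Expense> expenses = new ArrayList<>();

        User(long id, String email, String passwordHash) {
            this.id = id;
            this.email = email;
            this.passwordHash = passwordHash;
        }

        // Keeps both sides of the user <-> expense relationship in sync.
        Expense addExpense(long id, String description, BigDecimal amount, LocalDate date) {
            Expense e = new Expense(id, this, description, amount, date);
            expenses.add(e);
            return e;
        }
    }

    // Stand-in for a JPA entity with a many-to-one link back to its owner.
    static final class Expense {
        final long id;
        final User owner;
        final String description;
        final BigDecimal amount;
        final LocalDate date;

        Expense(long id, User owner, String description, BigDecimal amount, LocalDate date) {
            this.id = id;
            this.owner = owner;
            this.description = description;
            this.amount = amount;
            this.date = date;
        }
    }

    // What the API returns: flat, no secrets, no back-reference to User.
    record ExpenseDto(long id, String description, BigDecimal amount, LocalDate date) {
        static ExpenseDto from(Expense e) {
            return new ExpenseDto(e.id, e.description, e.amount, e.date);
        }
    }

    // Service-layer method: entities stay inside, only DTOs leave.
    static List<ExpenseDto> listExpenses(User user) {
        return user.expenses.stream().map(ExpenseDto::from).toList();
    }
}
```

`addExpense` sets both sides of the relationship in one place, a common habit with bidirectional JPA associations, so the in-memory object graph never disagrees with itself.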

🐳 Docker: Development and Deployment

- Multi-stage Dockerfiles are a game changer: small, clean images with no leftover build tools.

- Environment variables (12-factor style) let me use the same image everywhere, from local dev to the cloud.

- Running both the app and Postgres in containers (with Docker Compose) gave me confidence my code would “just work” anywhere.

Tip: Debugging Docker networking taught me a lot about how containers talk to each other: `localhost` inside a container is not the same as `localhost` on your laptop!
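The 12-factor pattern and the `localhost` gotcha meet in one place: the database host. Here’s a minimal sketch, assuming hypothetical variable names `DB_HOST`, `DB_PORT`, and `DB_NAME` and a hypothetical database name `treso`. The defaults suit running the app directly on a laptop; in Docker Compose you’d set `DB_HOST` to the Postgres service’s name (for example `db`), because Compose’s default network resolves service names, while `localhost` would point back at the app’s own container.

```java
import java.util.Map;

// Hypothetical config helper; variable names and defaults are assumptions.
final class DbConfig {
    // Reads a value from the environment, falling back to a local-dev default.
    static String env(Map<String, String> env, String name, String fallback) {
        String value = env.get(name);
        return (value == null || value.isBlank()) ? fallback : value;
    }

    // Builds the Postgres JDBC URL from the environment.
    static String jdbcUrl(Map<String, String> env) {
        String host = env(env, "DB_HOST", "localhost"); // set to the Compose service name in containers
        String port = env(env, "DB_PORT", "5432");
        String name = env(env, "DB_NAME", "treso");
        return "jdbc:postgresql://" + host + ":" + port + "/" + name;
    }
}
```

In the app you’d call `DbConfig.jdbcUrl(System.getenv())`; taking a `Map` instead of calling `System.getenv()` directly keeps the logic testable. Spring Boot can also do this without custom code: a placeholder such as `${DB_HOST:localhost}` in `application.properties` supplies a default, and an environment variable like `SPRING_DATASOURCE_URL` overrides `spring.datasource.url` through relaxed binding.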

☁️ Cloud Deployment with AWS EC2

- Spinning up an Ubuntu EC2 instance, installing Docker, and running my container felt like crossing into “real backend engineering.”

- Learning about AWS Security Groups (cloud firewalls) and how to open ports was new and crucial.

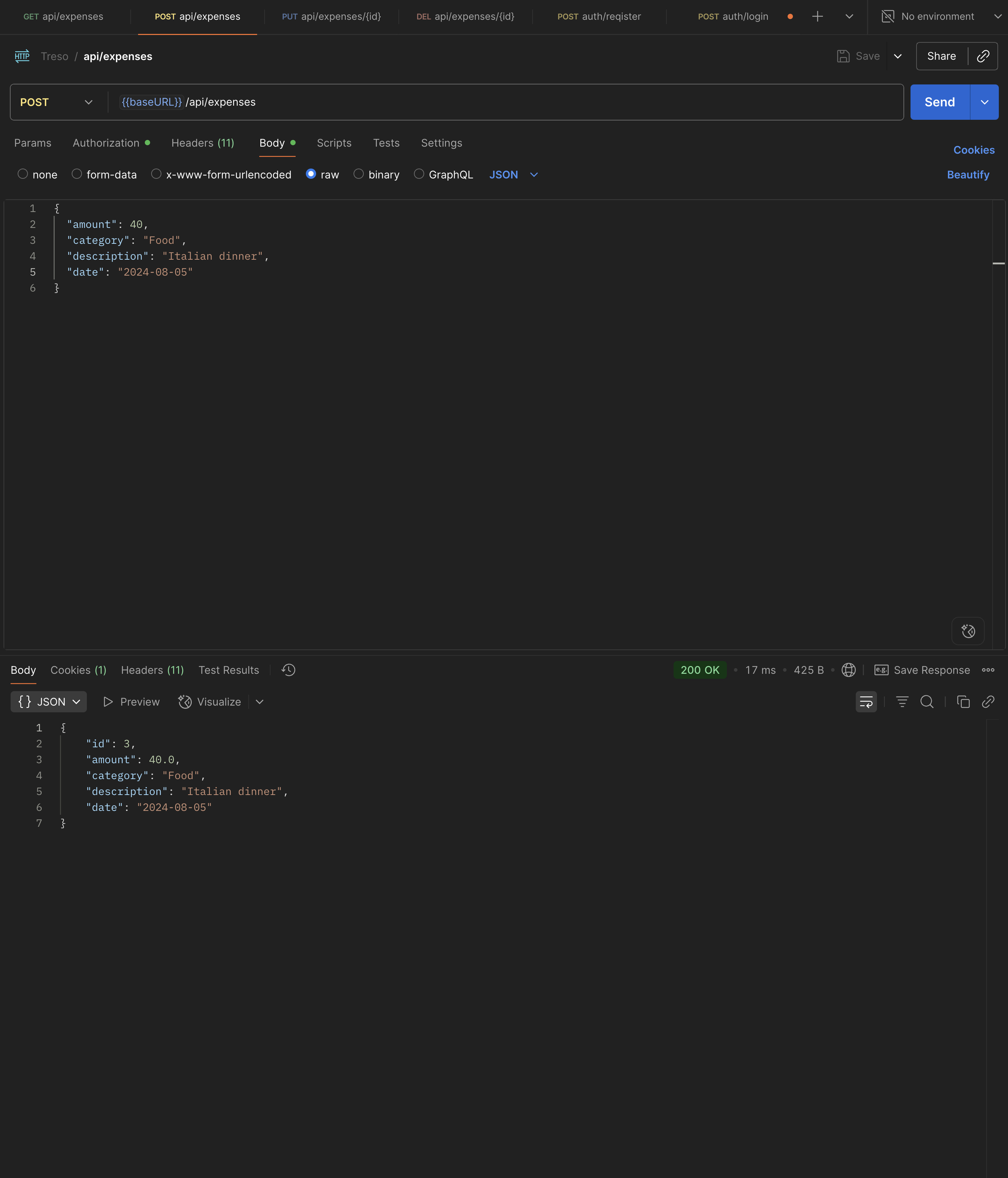

- Seeing my API live on a public IP and then testing with Postman was hugely satisfying.

🔍 Observability and API Docs

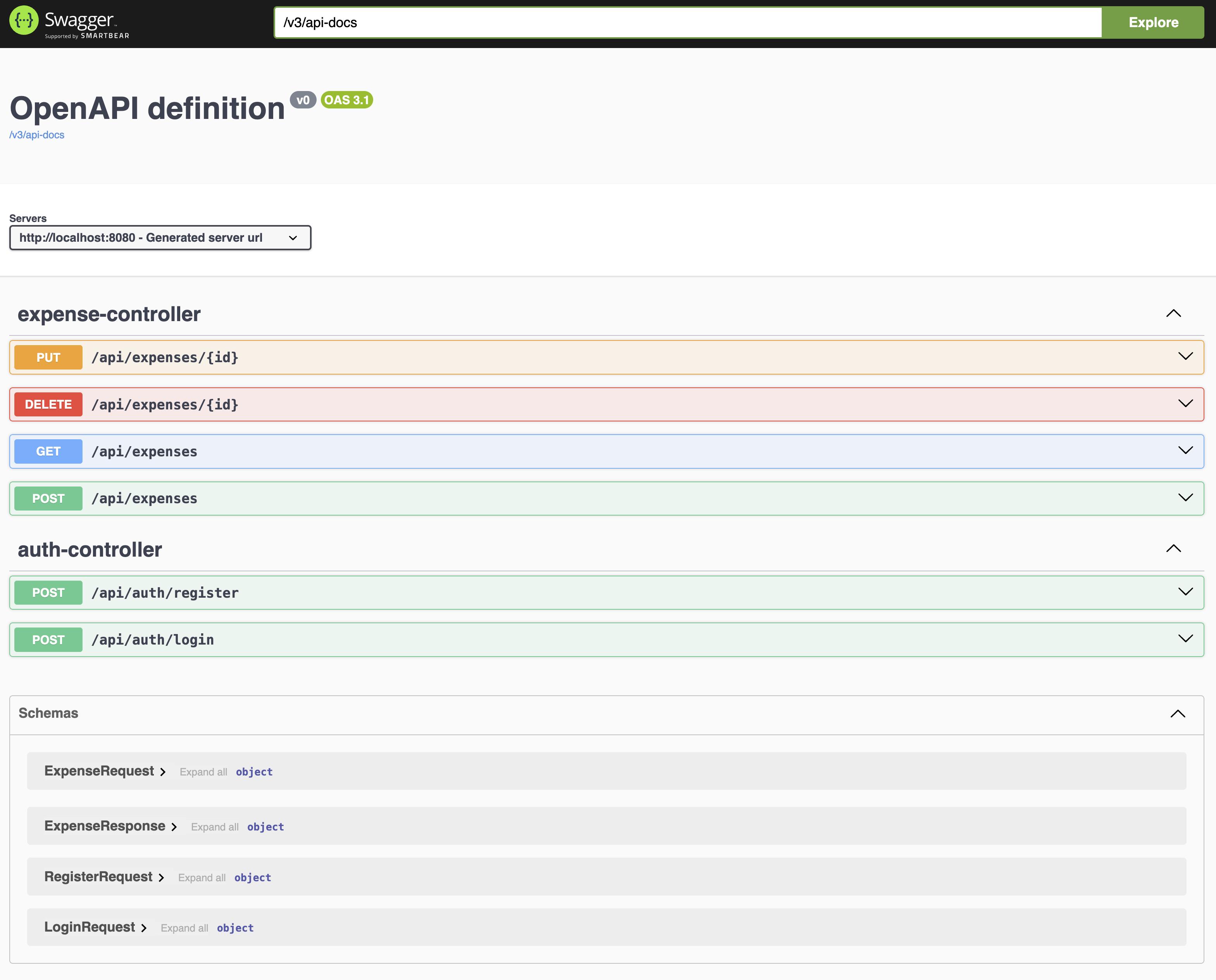

- Adding Springdoc OpenAPI made it effortless to generate and share live API docs.

- Actuator and Micrometer exposed health and metrics endpoints, an observability skill set I see in real job listings all the time.
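Postman is great for exploring, but after each deploy a scripted check against Actuator’s health endpoint is quicker. Here’s a minimal sketch using the JDK’s `HttpClient`, assuming `/actuator/health` is exposed over HTTP (Spring Boot’s default) and that the EC2 security group allows the app’s port; `HealthCheck` and the base URL are placeholders.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical post-deploy smoke check against Spring Boot Actuator.
final class HealthCheck {
    // Actuator answers 200 for UP and, by default, 503 for DOWN/OUT_OF_SERVICE,
    // so the HTTP status is the primary signal; the body check is a sanity check.
    static boolean isUp(int httpStatus, String body) {
        return httpStatus == 200 && body.contains("\"status\":\"UP\"");
    }

    // baseUrl is a placeholder such as "http://<ec2-public-ip>:8080".
    static boolean check(String baseUrl) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/actuator/health"))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return isUp(response.statusCode(), response.body());
    }
}
```

Splitting the pure `isUp` decision from the network call keeps the interesting logic testable without a running server.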

🎯 Key Takeaways

- Spring Boot is “enterprise” for a reason: so many best practices are built in.

- Docker is essential for real-world deployment: you’re not done until it runs in a container!

- Cloud (AWS EC2): The jump from “it works on my laptop” to “it works in the cloud” is all about automation, configuration, and security.

- Documentation, error handling, and security matter as much as features.

⏭️ What’s Next?

- I plan to add analytics endpoints, S3 file uploads, and a CI/CD pipeline in Phase 2.

- If you want to see the code or try it yourself, visit the GitHub repo.

📝 Final Thoughts

Building Treso was more than a coding exercise; it was an experiment in learning how professional backends are built, shipped, and deployed.

I’m excited to take these skills into future projects and internships!

Thanks for reading! If you have questions or want to connect, reach out on LinkedIn.